HBS Combined Panel Trial Details for 2018

Details of the combined panel trial purpose and process, from the 2018 EOI guidelines.

Also available as a PDF: HBS appendix

In the 2017 Marsden Fund Investment Plan, the Council committed to trialling a new broader panel model in the 2018 Round. In a parallel assessment process, the ‘Humanities, Behavioural and Social Sciences’ (HBS) panel will combine the research fields currently covered by the Social Sciences (SOC), Humanities (HUM), and Economics and Human & Behavioural Sciences (EHB) panels.

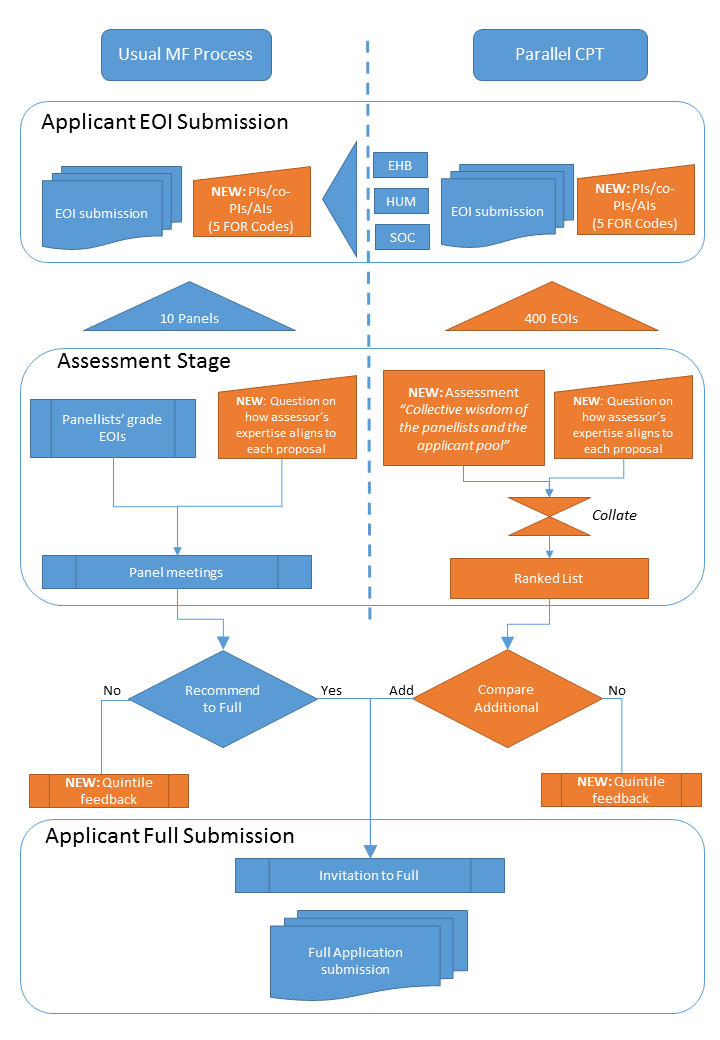

This will be a parallel process at the EOI stage only, in that applicants to the HUM, EHB and SOC panels will have their EOIs considered by those separate panels as in the current process, as well as having their EOIs assessed via a broader HBS panel. Full proposals selected by the broader panel trial will be considered in their own separate panels (EHB, HUM or SOC) in the full proposal round, as normal.

The parallel processes are being run to enable comparisons to be made between the two different assessment models.

The rationale for the broader panel trial in the Investment Plan is given below:

‘The broader panel structure is intended to increase expert availability and so achieve better consideration of interdisciplinary proposals and avoid perceptions and risk of disciplinary bias. It also allows for greater moderation between different disciplines. At the same time it decreases the fragmentation of panels and reduces the burden on individual assessors.’

Below are details of how the HBS trial is proposed to work at the EOI stage. Note that this is a trial ONLY.

Key Objectives

- Better consideration of interdisciplinary proposals

- Increase expert availability

- Manage burden on assessors

- Avoid perceptions and risk of disciplinary bias

- Allow greater moderation between different disciplines (for all panels to consider)

Proposed changes to the EHB, HUM and SOC panels → HBS

Flow chart showing the application/assessment process as it runs from selection of panellists and EOI submission through to recommendations for the full round. The chart provides a comparison to the current process and highlights where applicants are required to provide new information. The information in the chart is given in text form below.

NB: CPT = Combined Panel Trial.

- EHB, HUM and SOC applications will go through TWO parallel assessment models at the EOI stage. As well as the current individual panel model, EOIs will also automatically be presented to a new combined panel of Humanities, Behavioural and Social Sciences (HBS)

- Submitting the EOI application – EHB, HUM and SOC panels

2.1. The contact PI identifies up to 3 Field of Research (FOR) codes that best describe where the application sits within its discipline and sub-discipline.

2.2. Submission of an EOI to the HBS panel includes an expectation that all New Zealand-based PIs/co-PIs/AIs associated with the application are potential assessors who may be asked to review applications in the current round (similar to a ‘College of Experts’ model)[1]

2.2.1. Applicants may be asked to participate as assessors at the EOI stage (except Fast-Start applicants). Assessors will be subject to the terms and conditions outlined in the Panellists’ Guidelines.

2.2.2. Applicants provide 5 Field of Research (FOR) codes describing their own research interests and expertise (note that these could well be wider than the FOR codes provided for the EOI).

2.2.3. Applicants will have the opportunity to identify up to 3 people whom they do not wish to assess their proposal. This information will be kept confidential and will not be seen by assessors. Note to contact PIs: If there is any New Zealand-based researcher whom you do not wish to assess your EOI, please state this, providing reasons, in a communication provided to the Society on letterhead. The deadline for this is the EOI deadline, 22 Feb 2018.

- Submitted EOIs are grouped for assessment by category as Fast-Start or Standard (FS or SD)

- Each EOI application is distributed to 10 unique assessors with an aim to find:

4.1. Six assessors who have self-identified FOR codes which are closely aligned with those of the research project

4.2. Four assessors who will be randomly assigned to a project

4.3. The intention is for every proposal to have the same number of assessors who are discipline experts, with the randomly assigned assessors controlling for discipline density
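The six-plus-four split in step 4 can be illustrated with a minimal sketch. This is not the Society's actual assignment algorithm: the function name, the data layout and the placeholder codes are assumptions made for illustration, and the sketch ignores conflicts of interest, the Fast-Start/Standard split and the per-assessor workload limits described in step 5.

```python
import random


def assign_assessors(eoi_codes, assessor_codes, n_aligned=6, n_random=4, seed=None):
    """Pick assessors for one EOI: closest FOR-code matches plus a random draw.

    eoi_codes: set of FOR codes given for the EOI (hypothetical layout).
    assessor_codes: dict mapping assessor name -> set of self-identified FOR codes.
    """
    rng = random.Random(seed)
    # Sort names first so that ties in overlap are broken deterministically.
    names = sorted(assessor_codes)
    # Rank assessors by the number of FOR codes they share with the EOI.
    ranked = sorted(names, key=lambda n: len(assessor_codes[n] & eoi_codes), reverse=True)
    aligned = ranked[:n_aligned]
    # Draw the random assessors from everyone not already chosen as aligned.
    remaining = ranked[n_aligned:]
    randoms = rng.sample(remaining, min(n_random, len(remaining)))
    return aligned, randoms


# Placeholder pool: 20 assessors, each with one made-up code (not real FOR codes).
pool = {f"assessor_{i:02d}": {f"code_{i % 5}"} for i in range(20)}
aligned, randoms = assign_assessors({"code_0"}, pool, seed=1)
print(len(aligned), len(randoms))
```

Drawing the random assessors only from those not already selected keeps the ten assessors unique, as step 4 requires.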

- Each assessor is expected to grade between 20 and 40 EOIs

5.1. An assessor will be assigned to either FS or SD grants

5.1.1. The EOI-assessor assignment process will be automated, at least in the first pass, to best match application and assessor FOR codes. Constraints include a requirement that each assessor should assess at least 20 applications so as to allow for a sense of the distribution of scores.

5.2. The current 6-point assessment scale as used by the Marsden Fund will be retained as per the current process

5.3. Assessors will score to an ideal distribution as per the current process.

5.4. Question (for ALL panels)

5.4.1. Each assessor answers the following question for every application they assess:

‘How close do you believe you are to the research domain/discipline of the proposed research project?’

- I have expertise in the field

- I have expertise in a closely related field

- I have expertise in this general field

- I have familiarity with this general field

- I have no familiarity with the field

- Assessor scores are returned to the secretariat and compiled to a ranked list of all applications

6.1. Scores are not to be reconsidered by the assessors

6.2. This list is moderated using a trimmed average

6.2.1. We aim to receive 10 scores. The highest and lowest scores are excluded to remove outliers, and the average is calculated across the remaining eight scores. This trimmed average is used to generate the ranking across all applications.

6.2.2. An example of 10 scores for three different proposals, comparing average and trimmed average scores:

| Proposal | Initial scores | Average | Trimmed average |
| --- | --- | --- | --- |
| Proposal A | 1 2 3 2 1 1 2 3 1 5 | 2.1 | 1.87 |
| Proposal B | 4 3 3 4 5 5 6 1 4 6 | 4.1 | 4.25 |
| Proposal C | 1 1 2 2 2 2 2 2 3 3 | 2.0 | 2.00 |

6.2.3. Each assessor will receive the final rankings after moderation (trimmed average) so that they can compare them with their own scores for the proposals they assessed. Scores will be provided anonymously, using a descriptive statistic method (e.g. a box plot).
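The trimmed average in 6.2.1 can be checked with a short sketch using the three example proposals from 6.2.2. The function name is an illustrative assumption. The sketch drops exactly one highest and one lowest score, which matches the worked figures above.

```python
def trimmed_average(scores):
    """Mean of the scores after dropping one highest and one lowest value."""
    if len(scores) < 3:
        raise ValueError("need at least three scores to trim both ends")
    kept = sorted(scores)[1:-1]  # drop the single lowest and single highest score
    return sum(kept) / len(kept)


examples = {
    "Proposal A": [1, 2, 3, 2, 1, 1, 2, 3, 1, 5],
    "Proposal B": [4, 3, 3, 4, 5, 5, 6, 1, 4, 6],
    "Proposal C": [1, 1, 2, 2, 2, 2, 2, 2, 3, 3],
}

for name, scores in examples.items():
    plain = sum(scores) / len(scores)
    print(name, plain, trimmed_average(scores))
```

For Proposal A the exact trimmed value is 1.875, which the example in 6.2.2 shows as 1.87.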

- A face-to-face review and recommendation meeting is held by the three convenors of EHB, HUM and SOC panels.

7.1. A review of the process takes place using data obtained during the assessment procedure. This includes an assessment of the trimmed average procedure, a check for any aberrant assessor scores, and monitoring of data on the discipline mix.

7.2. A cut point is set in the ranking.

7.3. Convenors will then compare successful EOIs from the current EHB/HUM/SOC process to the ranked list from the HBS trial. The convenors will look to recommend additional proposals that were successful in the HBS trial but were not successful in the EHB/HUM/SOC assessment model.

7.4. A recommendation will be made regarding which projects will go forward to the full application round.
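The comparison in 7.3 amounts to finding EOIs that sit above the HBS cut point but were not selected by the current panels. A minimal sketch follows, assuming the HBS list is ordered best-first and that EOIs carry simple identifiers. The identifiers, the function name and the cut point value are made up for illustration.

```python
def additional_candidates(hbs_ranked, cut_point, current_successful):
    """EOIs above the HBS cut point that the current panel process did not select."""
    selected = set(current_successful)
    # Keep the HBS ranking order so convenors can review candidates best-first.
    return [eoi for eoi in hbs_ranked[:cut_point] if eoi not in selected]


# Hypothetical identifiers; hbs_ranked is assumed to be ordered best-first.
hbs_ranked = ["EOI-07", "EOI-02", "EOI-11", "EOI-05", "EOI-09"]
current_successful = {"EOI-02", "EOI-05", "EOI-09"}

extra = additional_candidates(hbs_ranked, cut_point=3, current_successful=current_successful)
print(extra)  # ['EOI-07', 'EOI-11']
```

The output is only a candidate list. Under 7.3 and 7.4 the convenors still decide which of these proposals to recommend.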

- The recommendation is approved by the MFC, and approximately 80-100 proposals are invited to the Full Proposal round.

- Full proposals will then go back into the current Marsden Full proposal assessment model and will be assessed in their individual panels – EHB, HUM or SOC.

How the proposed model addresses the key objectives:

- Better consideration of interdisciplinary proposals

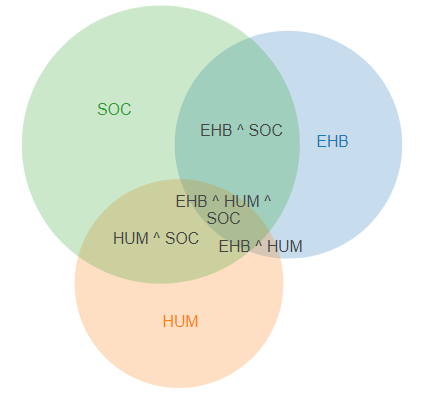

- The dynamic nature of the application-assessor assignment process allows the best assessors to be chosen from across the full range of disciplines represented in the combined pool of assessors (figure 1).

- The greatly increased size of the pool of assessors in the EOI stage will increase the likelihood that interdisciplinary applications will be assessed by experts who have familiarity within and across the different subfields.

Figure 1. FOR code overlap for HUM, SOC and EHB panels (2014-2016)

- Increase expert availability

- Current panels have a membership of 7-10 panellists. This limits the assessment expertise available for each application.

- The new HBS panel is expected to draw on more closely aligned expertise from the pool of experts (all PIs, co-PIs and AIs in the current round, along with some previous panellists).

- Often only 1 to 2 people on a traditional panel consider themselves to have expertise in the field (i.e., 12-20% of the panel), and conflicts of interest can exclude expertise from the panel. The proposed HBS trial is expected to raise this level of expertise to nearly 60%.

- Manage burden on assessors

- The traditional panel may require each panellist to review 120 or more EOI proposals. The HBS combined panel trial will reduce individual assessor burden by requiring a maximum of 40 EOI applications per assessor.

- The model scales up, as an increase in application numbers produces an increase in potential assessors.

- Avoid perceptions and risk of disciplinary bias

- A larger pool of experts reduces perceived disciplinary bias, because a proposal can be assigned to more closely related peers for assessment. The random assessor component counters any advantage that an application might gain through being in an area of high discipline density within the assessor pool.

- Allow greater moderation between different disciplines

- The larger pool of assessors allows for greater moderation across and between different disciplines. The trimmed average procedure limits the impact of any outliers during the assessment process.

- The review and recommendation step in HBS allows for an overview of data across the whole domain. The moderation group will form a view of the process and make recommendations to Council on whether all disciplines are broadly supported.

[1] For background on where this model came from, see: https://www.nsf.gov/nsb/publications/2016/nsb201641.pdf