Research

Published 16 January 2025

Changing realities: Editing 360° scenes to make immersive virtual worlds

Dr Fanglue Zhang and Professor Neil Dodgson of Te Herenga Waka—Victoria University of Wellington are developing methods to convincingly edit Mixed Reality environments

Imagine stepping into a virtual world where you can watch animals gather at a watering hole in the Serengeti National Park, or cheer on your favourite football team from the stands. This is just a taste of what Mixed Reality (MR) experiences can offer. MR experiences blend real-world and virtual elements, and have a multitude of possible applications, particularly in entertainment and training.

Panoramic videos are easy to produce and represent a cost-effective way of generating rich environments for MR experiences. However, adding convincing virtual objects to real-world panoramas remains a challenge, since these objects need to look realistic from different viewing angles.

In their Marsden Fast-Start project, Dr Zhang (an expert in image and video processing and computer graphics) and Associate Investigator Professor Dodgson (an expert in 3D display technology, image processing algorithms and visual perception) are developing methods that can adapt dynamic, single-camera panoramic videos into more immersive environments for MR applications. Using advanced deep learning techniques, the team, which includes PhD students Kun Huang, Simin Kou and Yujia Wang, Master's student Yiheng Li and US collaborator Dr Connelly Barnes, has developed several novel methods to edit 360° scenes for MR applications.

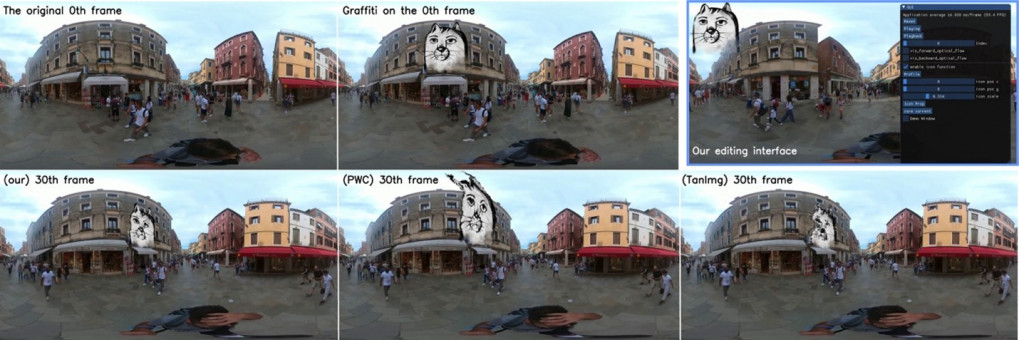

One of the challenges of adding new objects into 360° panoramic environments is that they must maintain their position relative to other parts of the scene as the viewing angle changes and time passes. The way that objects, surfaces and edges appear to move through space is known as “optical flow”. Unfortunately, existing computational methods are often inaccurate when it comes to estimating optical flow in 360° scenes, leading to unnatural movement of objects and loss of immersion. To solve this problem, Dr Zhang and Professor Dodgson’s team have developed a “multi-projection-based” approach to computing optical flow. This approach combines predictions from multiple single-projection models and results in 40% more accurate estimations of optical flow in MR panoramic scenes. It can be used not only to track object movement but also to improve the temporal and spatial consistency of virtual objects edited into MR panoramic videos (Figure 1).

Figure 1: Dr Zhang and Professor Dodgson’s multi-projection-based approach to predicting optical flow can be used for video editing. Using an inserted graffiti element as a test case, the team’s approach (lower left-most panel) is more temporally consistent than existing single-projection methods (such as PWC and Tangent image-based models, or TanImg): as the viewing angle moves over time, the graffiti also shifts to maintain its position and angle relative to the rest of the scene (figure supplied).
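To give a rough sense of what "combining predictions from multiple single-projection models" could look like, here is a minimal Python sketch. The article does not describe how the team merges the predictions, so this is not their method. It simply assumes that each model's flow field has already been resampled onto a shared panorama grid and that each model supplies a per-pixel confidence, then takes a confidence-weighted average. The function name `fuse_flows` and the data layout are hypothetical.

```python
from typing import List, Sequence, Tuple

Flow = Tuple[float, float]        # (dx, dy) motion of one pixel, in pixels
FlowField = List[List[Flow]]      # rows of per-pixel flow vectors
Confidence = List[List[float]]    # rows of per-pixel weights (assumed input)


def fuse_flows(fields: Sequence[FlowField],
               confidences: Sequence[Confidence]) -> FlowField:
    """Confidence-weighted average of several flow fields on the same grid.

    Assumption: this averaging rule is illustrative only and is not taken
    from the research described in the article.
    """
    if not fields or len(fields) != len(confidences):
        raise ValueError("need one confidence map per flow field")
    height, width = len(fields[0]), len(fields[0][0])
    fused: FlowField = []
    for y in range(height):
        row: List[Flow] = []
        for x in range(width):
            total = sum(c[y][x] for c in confidences)
            if total == 0:
                # No model is confident here; fall back to zero motion.
                row.append((0.0, 0.0))
                continue
            dx = sum(c[y][x] * f[y][x][0]
                     for f, c in zip(fields, confidences)) / total
            dy = sum(c[y][x] * f[y][x][1]
                     for f, c in zip(fields, confidences)) / total
            row.append((dx, dy))
        fused.append(row)
    return fused


# Two one-pixel "flow fields"; the second model is trusted three times as much.
flow_a = [[(2.0, 0.0)]]
flow_b = [[(4.0, 2.0)]]
fused = fuse_flows([flow_a, flow_b], [[[1.0]], [[3.0]]])
print(fused)  # [[(3.5, 1.5)]]
```

A real 360° pipeline would also need to handle the horizontal wrap-around of the panorama and the distortion near the poles, which this sketch ignores.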

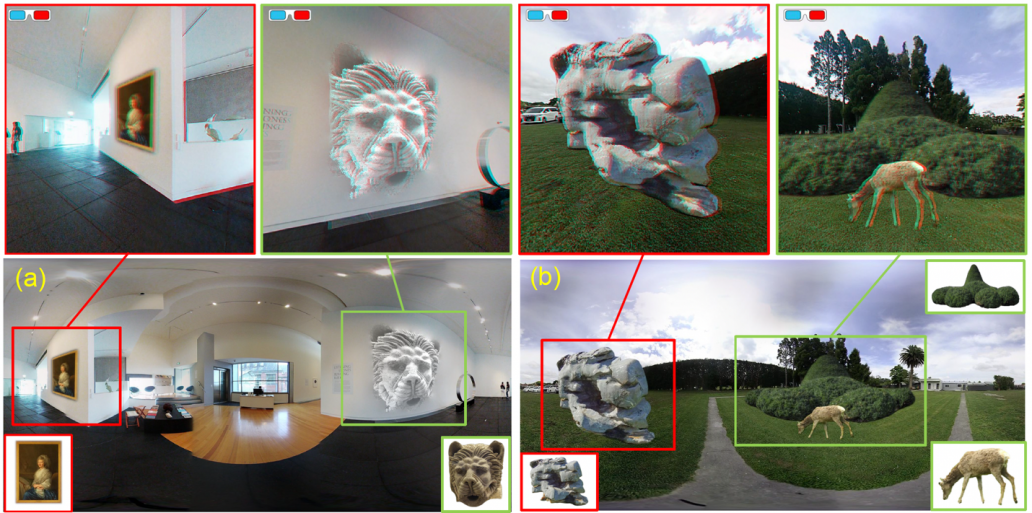

Another challenge for panoramic MR environments is maintaining correct depth perception. “Anaglyphs” are pairs of overlapping images that appear 3D when viewed through specific filters (like the red-blue glasses once used for 3D movies). Unfortunately, this 3D effect fails if the anaglyph is viewed from a different angle than intended, which breaks immersion when anaglyph objects are added into panoramic MR scenes. Dr Zhang and Professor Dodgson’s team have come up with a view-dependent projection method that improves depth perception of inserted anaglyph objects, making them appear more real within the scene (Figure 2). A short video explainer of this work is also available.
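For readers unfamiliar with anaglyphs, the basic idea can be sketched in a few lines of Python. This is the common red-cyan composition (red channel from the left-eye view, green and blue from the right-eye view), shown only as general background. It is not the team's view-dependent projection method, and the function name `make_anaglyph` and the nested-list image format are assumptions made for the example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]      # (red, green, blue), each 0-255
Image = List[List[Pixel]]         # rows of pixels


def make_anaglyph(left: Image, right: Image) -> Image:
    """Combine a stereo pair into a single red-cyan anaglyph image.

    The red channel is taken from the left-eye view and the green and blue
    channels from the right-eye view, so that coloured filters can route
    one view to each eye.
    """
    same_rows = len(left) == len(right)
    if not same_rows or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have the same size")
    return [
        [(l_px[0], r_px[1], r_px[2]) for l_px, r_px in zip(l_row, r_row)]
        for l_row, r_row in zip(left, right)
    ]


# A tiny 1x2 stereo pair, just to show which channels end up where.
left_view = [[(200, 10, 10), (0, 0, 0)]]
right_view = [[(5, 100, 150), (255, 255, 255)]]
print(make_anaglyph(left_view, right_view))
# [[(200, 100, 150), (0, 255, 255)]]
```

Because the two views are fixed when the image is composed, the depth cue is only correct for the viewing direction the pair was rendered for, which is the limitation the paragraph above describes.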

By addressing some of the challenges of building and editing 360° MR environments, Dr Zhang and Professor Dodgson’s team hope that their work will contribute to the development of next-generation MR and metaverse applications that will transform the way we create and experience virtual worlds.

Figure 2: The team’s proposed stereo image composition method provides depth perception adapted to the user’s view direction. The top panel depicts objects inserted into scenes (a) and (b) which should appear to have appropriate depth if viewed using 3D anaglyph glasses (figure supplied)

RESEARCHER

Dr Fanglue Zhang

ORGANISATION

Te Herenga Waka—Victoria University of Wellington

FUNDING SUPPORT

Marsden Fund

CONTRACT OR PROJECT ID

VUW2018: 'Reconstructing Dynamic Panoramic Scenes in Mixed Reality'